GPU interconnect: NVLink high-bandwidth interconnect, 3rd generation.

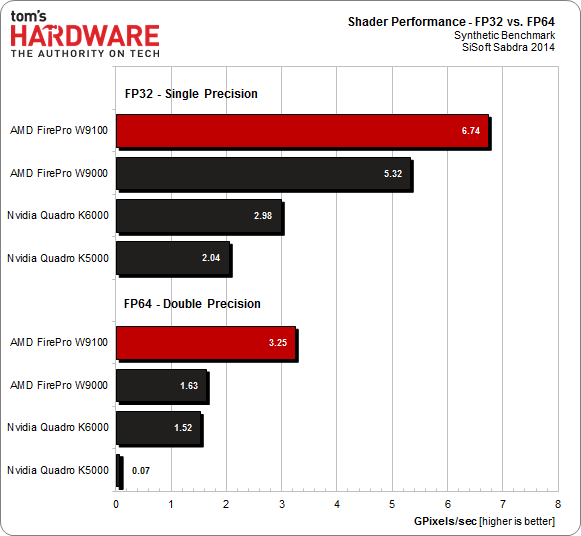

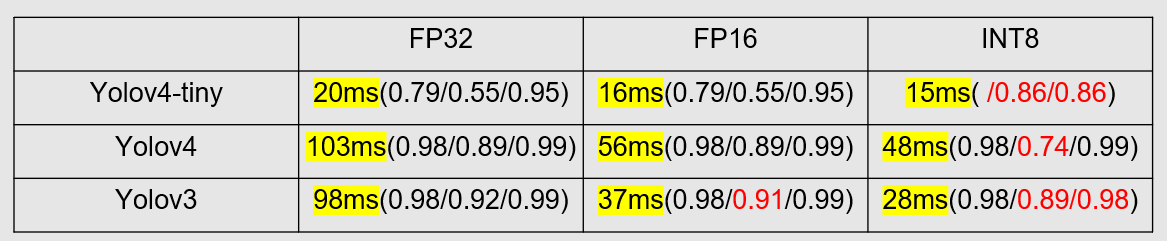

Supported precision types: FP64, FP32, FP16, INT8, BF16, TF32, Tensor Cores 3rd generation (mixed-precision).

Now let's take a look at each of these instances by family, generation and size in the order listed below. Each instance generation can have GPUs with a different architecture, and the timeline image below shows NVIDIA GPU architecture generations, GPU types and the corresponding EC2 instance generations. The number next to the letter (P3, G5) represents the instance generation; the higher the number, the newer the instance type. All this will become clearer in the following section when we discuss specific GPU instance types.

Each instance size has a certain vCPU count, GPU memory, system memory, GPUs per instance, and network bandwidth.

Historically, the P instance type represented GPUs better suited for high-performance computing (HPC) workloads, characterized by their higher performance (higher wattage, more CUDA cores) and support for double precision (FP64) used in scientific computing. G instance types had GPUs better suited for graphics and rendering, characterized by their lack of double precision and lower cost/performance ratio (lower wattage, fewer CUDA cores).

All this has started to change as the number of machine learning workloads on GPUs has grown rapidly in recent years. Today, the newer generation P and G instance types are both suited for machine learning. The P instance type is still recommended for HPC workloads and demanding machine learning training workloads, and I recommend the G instance type for machine learning inference deployments and less compute-intensive training.

- A complete and unapologetically detailed spreadsheet of all AWS GPU instances and their features
- Amazon EC2 GPU instances for deep learning
- Which GPUs to consider for HPC use-cases?
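To make the naming convention concrete (a family letter followed by a generation number, then a size), here is a minimal Python sketch that splits an instance type string into its parts. The `parse_instance_type` helper and the `InstanceType` tuple are hypothetical names made up for this illustration, not part of any AWS SDK, and the regular expression assumes names shaped like `p3.2xlarge` or `g4dn.xlarge`.

```python
import re
from typing import NamedTuple


class InstanceType(NamedTuple):
    family: str       # e.g. "P" or "G"
    generation: int   # the number next to the letter, e.g. 3 in "p3"
    attributes: str   # optional suffix letters, e.g. "dn" in "g4dn"
    size: str         # e.g. "2xlarge"


# Assumed shape: <family letters><generation digits><optional suffix>.<size>
_PATTERN = re.compile(r"^([a-z]+)(\d+)([a-z-]*)\.([a-z0-9]+)$")


def parse_instance_type(name: str) -> InstanceType:
    """Split an EC2 instance type name into family, generation, suffix and size."""
    match = _PATTERN.match(name.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name!r}")
    family, generation, attributes, size = match.groups()
    return InstanceType(family.upper(), int(generation), attributes, size)


def newest_first(names: list[str]) -> list[str]:
    """Order instance type names by generation, highest (newest) first."""
    return sorted(names, key=lambda n: parse_instance_type(n).generation, reverse=True)
```

For example, `parse_instance_type("p3.2xlarge")` gives family `P` and generation `3`, and `newest_first(["g4dn.xlarge", "g5.xlarge"])` puts `g5.xlarge` first. The generation number only says which instance is newer within a family; it does not by itself tell you which GPU architecture is inside.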


- What software and frameworks to use on AWS?
- Cost optimization tips when using GPU instances for ML


Just a decade ago, if you wanted access to a GPU to accelerate your data processing or scientific simulation code, you’d either have to get hold of a PC gamer or contact your friendly neighborhood supercomputing center.
